by LaGuardia professor Dr. Yun Ye

ABOUT THE AUTHOR

Dr. Yun Ye

Dr. Yun Ye joined LaGuardia in 2014 as a faculty member in the Department of Mathematics, Engineering and Computer Science.

Dr. Ye conducts research on secure and energy-efficient wireless communications. She has designed course assignments and student projects to promote computational thinking across disciplines. Her assignments in MAC100 Computing Fundamentals, a Pathways course in Scientific World, enable students to use technology to solve problems related to their fields of study.

Dr. Ye is a mentor of the LaGuardia Women in Technology club. She has worked with students on tech projects including directional signal transmission, error correction coding, and underwater acoustic communication systems.

Machine learning is a branch of artificial intelligence that has become pervasive in our society. The concept was formally introduced in 1959 by IBM employee Arthur Samuel through his research on computer checkers, and described as “the field of study that gives computers the ability to learn without explicitly being programmed”. In 1962, a Connecticut checkers champion played against an IBM 7094 computer and lost the game. This feat is considered a major milestone in the history of artificial intelligence. The year 1997 witnessed another leap in AI technology when the IBM supercomputer Deep Blue defeated the world champion Garry Kasparov in a chess match [1]. Less than two decades later, the computer Go program AlphaGo, developed by DeepMind Technologies, beat a human professional player on a full-sized 19x19 board, which has 10^170 possible configurations, a googol times more than chess. This computer victory was chosen as a runner-up for the Breakthrough of the Year 2016 by Science [2].

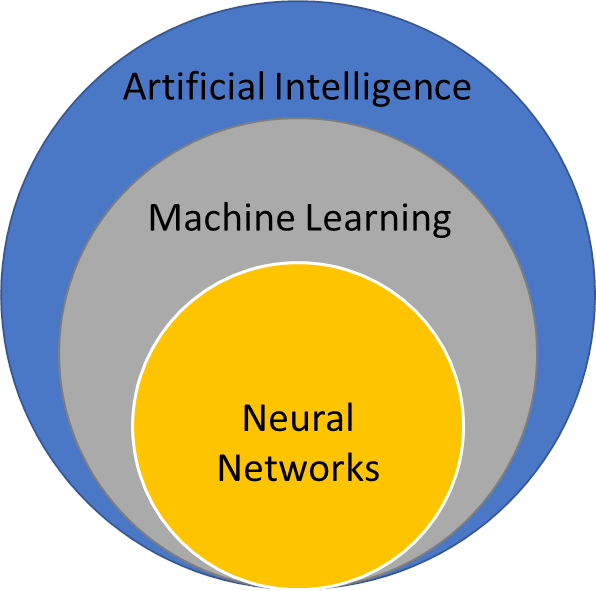

Figure 1: Relation between artificial intelligence and machine learning algorithms.

The process of machine learning is implemented by computer programs made up of algorithmic instructions. In traditional applications where the relation between input and output can be captured by explicit formulas or a fixed model, detailed instructions are created for the computer to carry out the task. For more complicated problems such as game playing and face recognition, it is too time-consuming, if not impossible, to design programs that cover all possible relations. Instead, machine learning makes computers learn from sample data and update the relation model with each new input. Artificial neural networks, constructed from numerous interconnected processing nodes, are a commonly used class of algorithms that support this learning process.

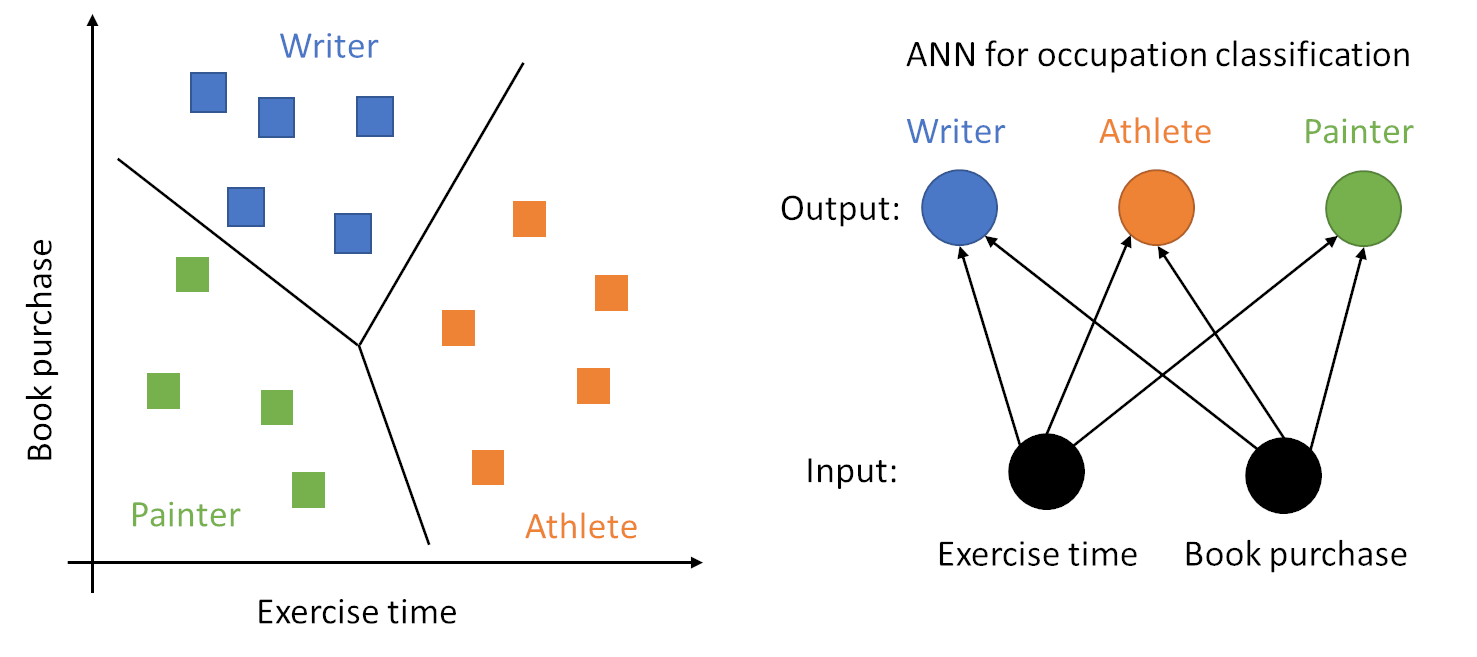

Machine learning algorithms perform two fundamental types of tasks, namely classification and prediction [3]. Classification labels input data as belonging to one of several classes with distinctive patterns. These classes can be specified by users in labeled datasets (supervised learning), or they can be determined by the computer program through clustering of unlabeled datasets (unsupervised learning). As a simple example, consider a machine learning algorithm designed to classify a subject’s occupation based on two behavior patterns: exercise time and book purchases. In other words, the computer program takes the behavior patterns as input and identifies the subject’s occupation as output. A training dataset containing samples of known subjects’ behavior patterns and occupations is used to build a relation model between the input and the output.

As shown in Figure 2, each sample in the training dataset (denoted by a square symbol) carries an occupation label, indicated by the color of the square, and numerical values of the two behavior patterns, indicated by the coordinates of the square in the coordinate system. The three black lines dividing the samples indicate the separating criteria for the three types of occupation.

Figure 2: An artificial neural network for occupation classification.

In this ideal scenario, three occupations (writer, athlete, and painter) are considered, and the samples in the training dataset can be linearly separated. An artificial neural network (ANN) with an input layer and an output layer is constructed to model the relation between the subject’s behavior and occupation. The input layer contains processing nodes (denoted by black circles) that take the numerical values of the two behavior patterns. The output layer contains processing nodes (denoted by blue, orange, and green circles) that indicate the occupation type as the classification outcome. The links connecting the input layer and the output layer are the weights that represent the direct relation between each behavior pattern and each occupation. These weights are computed from the training dataset so as to minimize misclassification. Once the weights are trained, the relation model is established, and new input behavior patterns without occupation information (testing data) can be associated with one occupation at the output of the ANN. In many practical applications, multiple layers with more processing nodes are included to model nonlinear relations between the input and the output [4].
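The two-layer network described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the model behind Figure 2: the sample values, the function names, and the perceptron-style update rule used to train the weights are all assumptions made for the example.

```python
# Hypothetical training samples, invented for illustration:
# (exercise hours per week, books bought per month) -> occupation.
TRAINING = [
    ((1.0, 9.0), "writer"), ((2.0, 8.0), "writer"), ((1.5, 7.5), "writer"),
    ((9.0, 1.0), "athlete"), ((8.0, 2.0), "athlete"), ((10.0, 1.5), "athlete"),
    ((2.0, 2.0), "painter"), ((1.0, 1.5), "painter"), ((2.5, 1.0), "painter"),
]
OCCUPATIONS = ["writer", "athlete", "painter"]


def scores(weights, x):
    """One output node per occupation: weighted sum of the two inputs plus a bias."""
    return [w[0] * x[0] + w[1] * x[1] + w[2] for w in weights]


def predict(weights, x):
    """Return the occupation whose output node has the highest score."""
    s = scores(weights, x)
    return OCCUPATIONS[s.index(max(s))]


def train(samples, epochs=1000):
    """Perceptron-style training (an assumed rule): adjust weights after each mistake."""
    weights = [[0.0, 0.0, 0.0] for _ in OCCUPATIONS]
    for _ in range(epochs):
        mistakes = 0
        for x, label in samples:
            guess = predict(weights, x)
            if guess != label:
                mistakes += 1
                t, g = OCCUPATIONS.index(label), OCCUPATIONS.index(guess)
                # Strengthen the links to the correct node, weaken those to the wrong one.
                for i, value in enumerate((x[0], x[1], 1.0)):
                    weights[t][i] += value
                    weights[g][i] -= value
        if mistakes == 0:  # every training sample is classified correctly
            break
    return weights


weights = train(TRAINING)
print(predict(weights, (9.0, 1.5)))  # a new subject without an occupation label
```

Each row of `weights` plays the role of the links feeding one output node. Because the invented samples are linearly separable, training stops as soon as a full pass produces no misclassification.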

While classification confines the output to a limited set of known options, prediction aims to generate probable values of unknown variables that may have an unlimited range, as in weather forecasting and economic growth projection. An ANN can also be constructed to model this input-output relation. When the computer program interacts with a dynamic environment and no sample data is available, as in game play and autonomous vehicles, the algorithm uses trial and error to establish the model, a process known as reinforcement learning.
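To make the idea of prediction concrete, the sketch below fits a straight line to a handful of past observations and extrapolates one step ahead. It deliberately swaps in ordinary least-squares linear regression for an ANN to keep the example short, and the data values and function names are hypothetical.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope, intercept, x):
    """Predicted output for a new input value."""
    return slope * x + intercept


# Hypothetical data: year index vs. economic output in arbitrary units.
YEARS = [1, 2, 3, 4, 5]
OUTPUT = [102.0, 104.1, 105.9, 108.2, 109.8]

slope, intercept = fit_line(YEARS, OUTPUT)
print(predict(slope, intercept, 6))  # projected value for year 6
```

Unlike the classifier, the output here is a number on a continuous scale rather than one of a fixed set of labels.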

Besides neural networks, other popular machine learning algorithms include support vector machines, linear regression, and singular value decomposition, which are often combined with one another to implement complex AI tasks. The prevalence of AI applications depends heavily on rapid advances in semiconductor manufacturing and sensor technology in the electronics industry, because the hardware devices running these programs must deliver ever faster computing speed, larger memory capacity, and higher data resolution.

Machine learning has profoundly changed the way computers work for humans. A wide range of industry sectors have been revolutionized through improved automation, adaptivity, and efficiency, and new forms of intelligent products and services are emerging. A few representative applications are listed below to demonstrate its impact.

The advent of digital imaging allows computers to process visual data and extract meaningful information. For example, face recognition has been used in video surveillance, in photo tagging, and in tracking terrorists, criminals, fugitives, and missing persons. On August 16, 2019, the New York City Police Department used face recognition tools to identify a man who left a pair of rice cookers in the Fulton Street subway station. The program compared images of the suspect from security camera footage with mug shots in the NYPD’s arrest database and presented selected matches for human review. It took the NYPD only one hour to identify the suspect [5].

Virtual reality takes computer vision technology to a new height. It has been applied in healthcare, education, archeology, architecture, gaming, and sports training to provide users with an immersive experience. In 2022, the UK-based fitness platform company Rezzil announced its first Oculus VR headset that will let users interact with Manchester City soccer players and participate in virtual drills inside a digital recreation of the club’s Etihad Stadium [6].

From auto text to voice control, natural language processing builds statistical models to determine the probability of a given sequence of words in structured sentences. A language model can also be used as a biometric template to synthesize a person’s text or speech, and to distinguish the true author from impostors. In 2005, a group of students at MIT’s computer science and artificial intelligence lab developed a computer program named “SCIgen” that randomly generates computer-science papers with realistic-looking graphs, figures, and citations. One was even accepted as a non-reviewed paper by a publisher [7]. Moving beyond nonsensical articles, DeepMind researchers introduced in 2022 an AI film authoring tool, “Dramatron”, capable of writing meaningful film scripts with structural context and cohesive dialogue [8].
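The statistical idea behind such language models, assigning a probability to a sequence of words, can be illustrated with a toy bigram model. This is a minimal sketch under stated assumptions: the three-sentence corpus and the function names are invented, and real systems use far larger corpora and far more sophisticated models.

```python
from collections import Counter

# Toy corpus, invented for illustration.
CORPUS = ["the cat sat", "the dog sat", "the cat ran"]


def train_bigrams(sentences):
    """Count how often each word follows each preceding word."""
    context_counts, pair_counts = Counter(), Counter()
    for sentence in sentences:
        words = ["<s>"] + sentence.split()  # <s> marks the start of a sentence
        for prev, word in zip(words, words[1:]):
            context_counts[prev] += 1
            pair_counts[(prev, word)] += 1
    return context_counts, pair_counts


def sequence_probability(sentence, context_counts, pair_counts):
    """Multiply the estimated probability of each word given the previous one."""
    words = ["<s>"] + sentence.split()
    prob = 1.0
    for prev, word in zip(words, words[1:]):
        if context_counts[prev] == 0:
            return 0.0  # the preceding word was never seen as a context
        prob *= pair_counts[(prev, word)] / context_counts[prev]
    return prob


context_counts, pair_counts = train_bigrams(CORPUS)
print(sequence_probability("the cat sat", context_counts, pair_counts))
print(sequence_probability("sat cat the", context_counts, pair_counts))
```

A word order that matches the corpus receives a higher probability than a scrambled one, which is the property that auto text and speech recognition rely on when ranking candidate sentences.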

A more recent AI sensation arrived in November 2022, when the American artificial intelligence research laboratory OpenAI launched its chatbot ChatGPT (Chat Generative Pre-trained Transformer). ChatGPT employs natural language processing techniques to understand the context of written input and answer user questions with a high level of accuracy, including questions from assignments and exams given to K-12 and college students. Two months later, the research team trained another language model to classify text as written by a human or by a chatbot [9].

A recommendation engine is an online marketing tool integrated into e-commerce websites to upsell and cross-sell products to customers, and ultimately to increase order values. Amazon recommendations are based on a collaborative filtering algorithm that predicts items of interest to customers from their purchase and browsing history, the items in their shopping cart, and the items they are currently browsing on the website. Examples include “customers who bought this item also bought”, “related to items you’ve viewed”, “frequently bought together”, and “this week’s best sellers”. Both on-site recommendation and off-site recommendation via email have been found effective in boosting customer conversion rates. According to a McKinsey survey, the use of recommendation engine technologies is responsible for an average annual growth of 20% in sales and 30% in profits in a business [10].
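A “customers who bought this item also bought” list can be approximated by counting how often items appear together in past orders. The sketch below is a simplified stand-in for collaborative filtering, not Amazon’s actual algorithm; the order data and function names are hypothetical.

```python
from collections import Counter
from itertools import permutations

# Hypothetical past orders, invented for illustration.
ORDERS = [
    {"tent", "sleeping bag", "lantern"},
    {"tent", "sleeping bag"},
    {"tent", "lantern"},
    {"sleeping bag", "pillow"},
    {"tent", "sleeping bag", "pillow"},
]


def co_purchase_counts(orders):
    """For each item, count how often every other item shares an order with it."""
    counts = {}
    for basket in orders:
        for a, b in permutations(basket, 2):
            counts.setdefault(a, Counter())[b] += 1
    return counts


def also_bought(item, counts, top_n=2):
    """Items most often bought together with `item`; ties are broken alphabetically."""
    ranked = sorted(counts.get(item, Counter()).items(),
                    key=lambda kv: (-kv[1], kv[0]))
    return [name for name, _ in ranked[:top_n]]


counts = co_purchase_counts(ORDERS)
print(also_bought("tent", counts))
```

Production systems typically normalize these counts into similarity scores so that very popular items do not dominate every list, but the underlying signal is the same shared purchase history.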

An autonomous vehicle is equipped with sensing devices that collect environmental data, from which its machine learning algorithm predicts an optimal motion for the vehicle to follow. In 2014, Tesla introduced Autopilot as an advanced driver-assistance system embedded in its electric cars, with low-speed automatic parking and summoning functions. An upgraded version, Enhanced Autopilot, featuring lane changing and highway navigation, was announced in 2016. By 2018, the vehicle could adjust acceleration at low speeds when an obstacle was detected in the path of travel. The system’s beta test targeting full self-driving capability started in 2020 [11]. Beyond transportation, autonomous vehicles reduce labor and risk in tasks performed under difficult conditions. From vacuum cleaners to Mars rovers, from aerial quadcopters to underwater drones, robotics and autonomous systems are considered essential technological components of the fourth industrial revolution.

A plethora of other AI applications have been developed based on machine learning: virtual assistants in customer service, fraud detection in online banking systems, automated transactions in eBay bidding and stock trading, protein structure prediction in drug discovery, and so on. PwC estimates that AI could increase global GDP by up to 14.3% by 2030, equivalent to a contribution of $15.7 trillion, and become the biggest commercial opportunity in the economic outlook. Retail, financial services, and healthcare are expected to reap the largest sector gains [12].

Like other computer technologies, machine learning has its own limitations and vulnerabilities. Among the concerns are safety, fairness, and academic integrity. In March 2018, when an experimental Uber Volvo SUV was operating in autonomous mode on a test route in Tempe, Arizona, its object classification algorithm failed to recognize a pedestrian walking a bicycle across the road in time for the vehicle to slow down, causing the world’s first pedestrian fatality involving a self-driving car [13]. Safe operation in high-stakes tasks remains a challenge in designing autonomous systems. Moreover, machine learning models trained with human-generated data will inevitably inherit the conscious and unconscious biases of the sample group. Scarcity of samples in some classes also skews the training result. In 2018, researchers from MIT and Stanford University conducted an experiment with three commercially released face recognition programs and reported an error rate of 0.8% in identifying light-skinned men versus 34% in identifying dark-skinned women [14]. Acquiring a comprehensive training dataset with sufficient diversity and with reduced bias, misinformation, disinformation, and unethical or unlawful information is another challenge in achieving fairness for the public good.

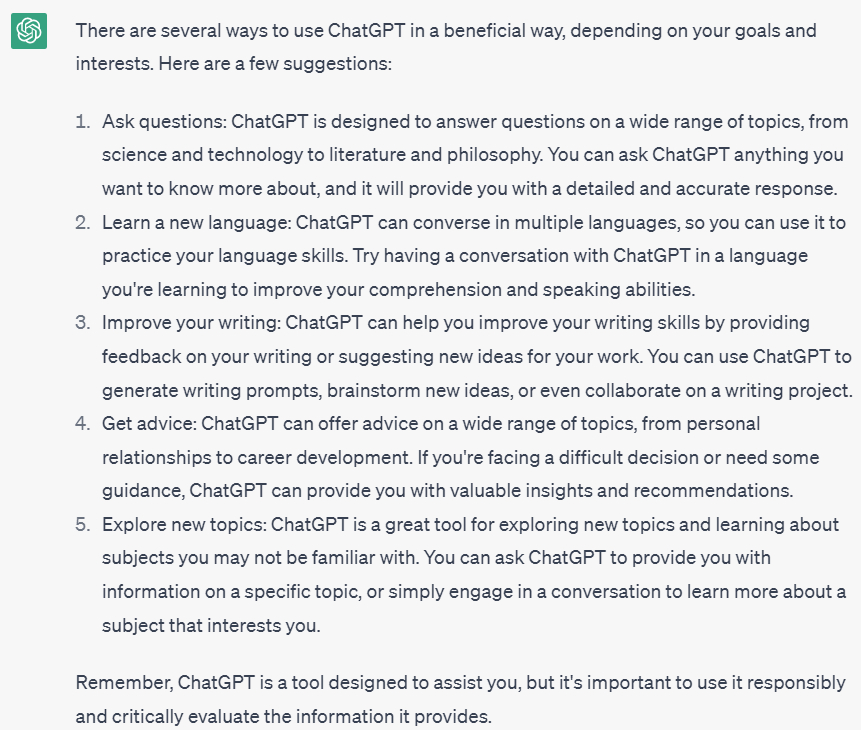

In December 2022, one month after ChatGPT was made publicly accessible, a college professor in South Carolina caught a student using the tool to generate an essay for his philosophy class. Reports of this incident sparked national debates on how AI tools should be properly used in academia. Educators are concerned that students could use this technology to plagiarize, while acknowledging its powerful text processing ability and its positive effects in assisting learning and teaching [15]. On April 18 and April 20, Hunter College hosted two ChatGPT workshops to discuss how AI is disrupting traditional pedagogical practices and how faculty are beginning to adapt to the changing landscape of higher education. A professor in our MEC department shared his experiment of asking ChatGPT math questions and receiving incorrect answers, which pointed to the immaturity of its algorithm and the influence of language structure. An English professor from City College recommended using the tool to explore resources for study topics. ChatGPT has its own suggestions on using itself in a beneficial way:

Figure 3: ChatGPT responses on using itself in a beneficial way

Finally, job displacement and AI overtaking human intelligence are two frequently discussed issues. As stated in a 2017 McKinsey report, occupations requiring a college degree or higher are less susceptible to job displacement by automation than those requiring secondary education or lower. Future workers will spend more time on interpersonal and creative activities involving logical reasoning, social skills, and cognitive capabilities that machines cannot match, and less time on predictable and repeatable activities where machines outperform humans [16]. In 2018, researchers from the MIT Initiative on the Digital Economy pointed out that all occupations will be affected by machine learning, yet none is likely to be completely replaced by it. The optimal way to utilize machine learning is to rearrange the constituent tasks in a job so that some tasks are handled by machines and others by humans [17].

Despite the breathtaking accomplishments achieved with machine learning, AI systems follow instructions designed by humans. They are not sentient beings aware of themselves. As Google CEO Sundar Pichai commented in a recent interview with 60 Minutes, “AI will be as good or as evil as human nature allows” [18].

[1] IBM, What is machine learning? Online available: https://www.ibm.com/topics/machine-learning

[2] AlphaGo, https://www.science.org/content/article/ai-protein-folding-our-breakthrough-runners

[3] Bishop C. M., Nasrabadi N. M., Pattern Recognition and Machine Learning. New York: Springer, 2006.

[4] Weitzenfeld, A., Arbib M. A., Alexander A., The Neural Simulation Language: A System for Brain Modeling, the MIT Press, 2002.

[5] Craig McCarthy, How NYPD’s facial recognition software ID’ed subway rice cooker kook, https://nypost.com/2019/08/25/how-nypds-facial-recognition-software-ided-subway-rice-cooker-kook/

[6] Player22 – a collection of athlete designed training drills, https://rezzil.com/player-22/

[7] Adam Conner-Simons, How three MIT students fooled the world of scientific journals, https://news.mit.edu/2015/how-three-mit-students-fooled-scientific-journals-0414

[8] Dramatron – an interactive co-authorship script writing tool, https://www.deepmind.com/open-source/dramatron

[9] ChatGPT, https://chat.openai.com/

[10] mckinsey.com, Targeted online marketing programs boost customer conversion rates. Online available: https://www.mckinsey.com/capabilities/growth-marketing-and-sales/how-we-help-clients/clm-online-retailer

[11] Tesla Autopilot, https://www.tesla.com/support/autopilot

[12] Anand Rao, PwC’s Global Artificial Intelligence Study: Exploiting the AI Revolution. Online available: https://www.pwc.com/gx/en/issues/data-and-analytics/publications/artificial-intelligence-study.html

[13] Lauren Smiley, 'I’m the Operator': The Aftermath of a Self-Driving Tragedy, https://www.wired.com/story/uber-self-driving-car-fatal-crash/

[14] Larry Hardesty, Study finds gender and skin-type bias in commercial artificial-intelligence systems, https://news.mit.edu/2018/study-finds-gender-skin-type-bias-artificial-intelligence-systems-0212

[15] Yang, J., Zahn, H., Educators worry about students using artificial intelligence to cheat. Online available: https://www.pbs.org/newshour/show/educators-worry-about-students-using-artificial-intelligence-to-cheat

[16] James Manyika, Susan Lund, Michael Chui, Jacques Bughin, Jonathan Woetzel, Parul Batra, Ryan Ko, and Saurabh Sanghvi, Jobs lost, jobs gained: What the future of work will mean for jobs, skills, and wages, https://www.mckinsey.com/featured-insights/future-of-work/jobs-lost-jobs-gained-what-the-future-of-work-will-mean-for-jobs-skills-and-wages#part4

[17] Brown, S., Machine learning, explained. Online available: https://mitsloan.mit.edu/ideas-made-to-matter/machine-learning-explained

[18] 60 Minutes, The AI revolution: Google's developers on the future of artificial intelligence. Online available: https://www.youtube.com/watch?v=880TBXMuzmk